A US company aims to take the lead in artificial intelligence and other industries, having developed a model capable of controlling humanoid robots

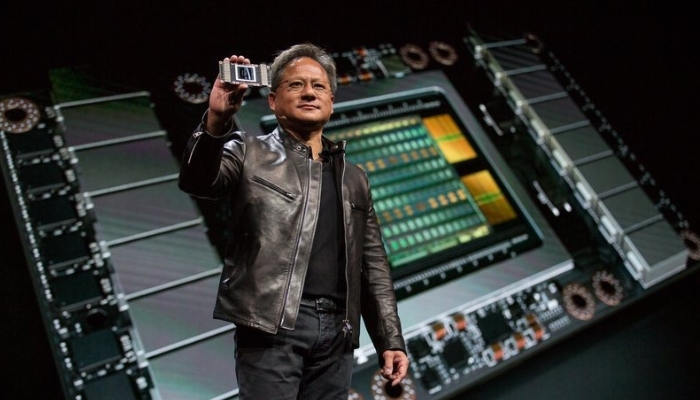

Chipmaker Nvidia has strengthened its position in artificial intelligence by introducing a new “superchip,” along with a quantum computing service and a suite of tools for developing general-purpose humanoid robotics. This article examines Nvidia’s recent advancements and their potential implications.

What is Nvidia doing?

The highlight of the company’s annual developer conference on Monday was the unveiling of the “Blackwell” series of AI chips. These chips are designed to power the highly expensive data centers used to train cutting-edge AI models, including the latest iterations of GPT, Claude, and Gemini.

The Blackwell B200, one of the chips introduced, represents a significant advancement over the company’s existing H100 AI chip. Nvidia stated that training a large AI model like GPT-4 currently requires around 8,000 H100 chips and consumes 15 megawatts of power, which is sufficient to power approximately 30,000 typical British homes.

With the new chips, the same training process would only require 2,000 B200s and 4MW of power. This could result in a reduction in electricity consumption by the AI industry or enable the same amount of electricity to be used to train much larger AI models in the future.
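The scale of the claimed saving can be checked with some quick arithmetic. The sketch below uses only the figures Nvidia quoted above; the variable names are illustrative, and the homes-per-megawatt ratio is an assumption derived from the 30,000-homes comparison rather than an official figure.

```python
# Figures quoted by Nvidia for training a GPT-4-scale model, as reported above.
H100_CHIPS = 8_000
H100_POWER_MW = 15
B200_CHIPS = 2_000
B200_POWER_MW = 4
HOMES_AT_H100_POWER = 30_000  # homes said to be powered by 15 MW


def reduction_factor(before: float, after: float) -> float:
    """Return how many times smaller `after` is than `before`."""
    return before / after


chip_factor = reduction_factor(H100_CHIPS, B200_CHIPS)
power_factor = reduction_factor(H100_POWER_MW, B200_POWER_MW)

# Assumption: scale the homes comparison linearly with power.
homes_per_mw = HOMES_AT_H100_POWER / H100_POWER_MW
homes_at_b200_power = homes_per_mw * B200_POWER_MW

print(f"Chips needed falls by a factor of {chip_factor:g}")
print(f"Power needed falls by a factor of {power_factor:g}")
print(f"4 MW is roughly the power used by {homes_at_b200_power:,.0f} homes")
```

On these numbers the chip count drops fourfold while power drops slightly less, by a factor of 3.75.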

What qualities distinguish a chip as ‘super’?

In addition to the B200, the company introduced another component of the Blackwell series: the GB200 “superchip.” This superchip integrates two B200 chips onto a single board, along with the company’s Grace CPU. According to Nvidia, this configuration provides “30x the performance” for server farms that run chatbots like Claude or ChatGPT, as opposed to training them. The company also says the system can cut energy consumption by a factor of up to 25.

By consolidating all components onto a single board, efficiency is improved by minimizing the communication time between chips. This allows the chips to allocate more processing time to performing the calculations that enable chatbots to function smoothly – or, at the very least, to converse.

What if I desire something larger?

Nvidia, valued at over $2 trillion (£1.6 trillion), is more than willing to accommodate such requests. Consider their GB200 NVL72: a single server rack equipped with 72 B200 chips interconnected by nearly two miles of cabling. Still not enough? You might be interested in the DGX Superpod, which consolidates eight of these racks into a single shipping-container-sized AI data center in a box. Pricing details were not disclosed at the event, but it’s safe to assume that if you have to inquire, it’s beyond your budget. Even the previous generation of chips cost a hefty $100,000 each or so.

What about my robotic companions?

Nvidia’s Project GR00T, possibly inspired by Marvel’s arboriform alien character, is a new foundation model designed for controlling humanoid robots. Foundation models, such as GPT-4 for text or Stable Diffusion for image generation, are the core AI models upon which specific applications are built. They are costly to create but drive further innovation, because they can later be fine-tuned for specific purposes.

Utilizing this foundation model, Nvidia aims to enable robots to comprehend natural language and replicate human movements by observing actions. This capability allows them to quickly acquire coordination, dexterity, and other essential skills necessary for navigating, adapting, and interacting with the real world.

GR00T is complemented by another Nvidia technology, Jetson Thor, a system-on-a-chip explicitly created to serve as a robot’s central processing unit. The overarching objective is to develop an autonomous machine capable of receiving instructions in natural human language to perform various general tasks, even those it hasn’t been specifically programmed for.

Quantum?

One of the few trending sectors where Nvidia has not been actively involved is quantum cloud computing. While this technology remains at the forefront of research, it has already been integrated into services from Microsoft and Amazon. Now, Nvidia is entering the field as well.

However, Nvidia’s cloud service will not be directly linked to a quantum computer. Instead, the service will use the company’s AI chips to simulate one. This approach aims to let researchers test their ideas without the cost of accessing real quantum computers, which are rare and expensive. Nvidia plans to offer access to third-party quantum computers through the platform in the future.